Language models (LLMs) have become a cornerstone of artificial intelligence, demonstrating remarkable capabilities in generating human-like text. They are employed in various applications, from chatbots to automated content creation.

However, while LLMs exhibit impressive prowess, they have inherent limitations that necessitate innovative solutions to enhance their performance.

IMAGE: UNSPLASH

Limitations Of Traditional Language Models

Despite their strengths, traditional LLMs often struggle with data limitations and contextual challenges. These models rely on static knowledge bases, which can become outdated quickly. Additionally, they may lack the nuanced understanding required for complex queries, resulting in responses that miss the mark in terms of relevance and accuracy.

Why LLMs Are Not Enough

Data Limitations

LLMs are trained on large datasets, but these datasets are finite. As new information emerges, models trained on older data can become obsolete, leading to responses that do not reflect the latest knowledge.

Contextual Challenges

LLMs may fail to grasp the full context of a query, especially if it involves specialized or uncommon knowledge. This can result in generic or partially accurate responses that do not fully satisfy the user’s needs.

Static Knowledge Bases

Traditional LLMs rely on a fixed set of information. Once trained, their knowledge does not update unless they are retrained, which is a resource-intensive process. This static nature limits their ability to provide up-to-date information.

Why Rag?

Addressing Data Scarcity

Retrieval-Augmented Generation (RAG) addresses the issue of data scarcity by dynamically fetching relevant information from external sources. This ensures that the model’s responses are based on the most current data available.

Enhancing Contextual Understanding

By integrating retrieval mechanisms, RAG enhances the model’s ability to understand and process complex queries. It allows the model to pull in specific pieces of information that are directly relevant to the user’s question, thereby improving the accuracy and relevance of the responses.

Dynamic Knowledge Integration

RAG enables models to dynamically integrate new knowledge without the need for retraining. This makes them more adaptable and capable of providing real-time, accurate information.

How It Works

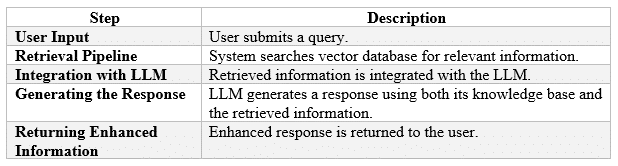

User Input

The process begins when a user inputs a query into the system. This query can be a question, a request for information, or any other form of textual input that requires a response.

Retrieval Pipeline

Once the user input is received, the retrieval pipeline is activated. This involves searching a vector database to find the most relevant documents or pieces of information related to the query.

Vector Database

A vector database stores information in a way that allows for efficient similarity searches. Each piece of information is represented as a vector, and the system can quickly retrieve the vectors that are most similar to the user’s query.

Integration With LLM

The retrieved information is then sent to the language model. The LLM processes this information, integrating it with its own knowledge base to generate a comprehensive response.

Generating The Response

With the integrated data, the LLM generates a response that is both contextually accurate and up-to-date. This response is designed to address the user’s query as precisely as possible.

Returning Enhanced Information

The final step is to return the enhanced response to the user. This response is not only more accurate but also enriched with relevant, real-time information.

Step-By-Step Process

Why It’s Needed?

Improving Accuracy

RAG significantly improves the accuracy of responses by supplementing the LLM’s knowledge with up-to-date, relevant information. This leads to answers that are more precise and reliable.

Enhancing Relevance

By pulling in specific data that directly addresses the user’s query, RAG enhances the relevance of the responses. Users receive information that is directly pertinent to their needs, improving satisfaction and utility.

Enabling Real-Time Updates

RAG allows for real-time integration of new information, ensuring that the model’s responses reflect the latest data and developments. This capability is crucial in fast-moving fields where information changes rapidly.

Applications Of RAG

Customer Support

In customer support, RAG can provide accurate and up-to-date responses to customer inquiries, reducing response times and improving the quality of support.

Content Creation

For content creators, RAG offers a way to generate relevant and informed content quickly, drawing from the latest available information.

Research Assistance

Researchers can benefit from RAG by obtaining accurate, current information that aids in their studies and projects, making their work more effective and efficient.

Future Prospects

Technological Advancements

As technology advances, RAG will continue to evolve, becoming even more efficient and capable. These advancements will further enhance the capabilities of LLMs, making them more powerful tools.

Potential Challenges

Despite its advantages, RAG faces challenges such as ensuring the quality of retrieved information and managing the computational resources required for real-time retrieval and integration. Addressing these challenges will be critical for the continued success of RAG.

Conclusion

RAG offers a significant enhancement to traditional LLMs by improving accuracy, relevance, and real-time information integration. It addresses many of the limitations of conventional models, making AI more effective and responsive.

The integration of RAG into more applications and the continuous improvement of the technology will drive the future of AI, making it an indispensable tool in various fields.

Key Takeaways

- RAG enhances traditional LLMs by addressing data limitations and contextual challenges.

- It integrates dynamic, real-time information, improving the accuracy and relevance of responses.

- RAG has applications in customer support, content creation, and research assistance.

- Future advancements and addressing current challenges will be crucial for the success of RAG.

IMAGE: UNSPLASH

If you are interested in even more technology-related articles and information from us here at Bit Rebels, then we have a lot to choose from.

COMMENTS