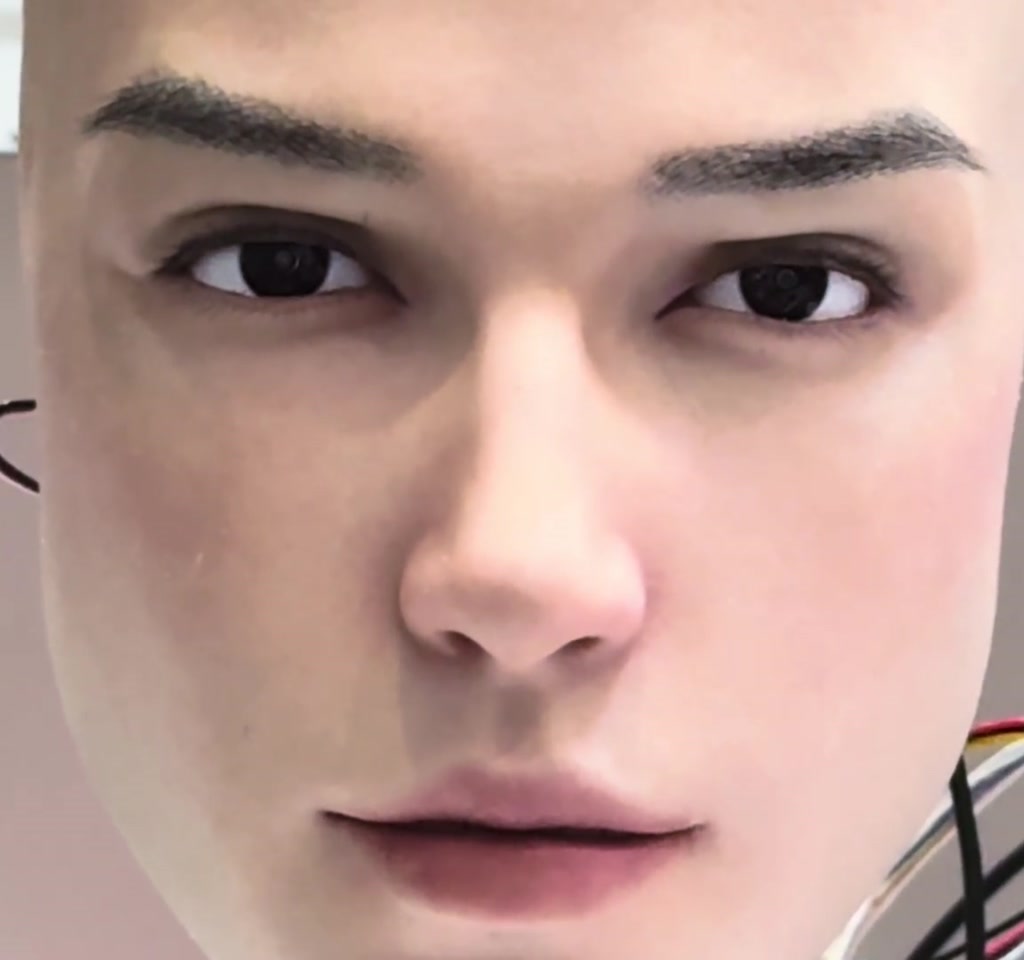

The viral clip of the AheadForm Origin M1 does one thing very efficiently: it convinces you that a machine is looking back. Within seconds the robot blinks, shifts gaze, and makes tiny facial adjustments the human visual system reads as intention. That immediate psychological presence is the design goal and the test.

The real significance is not only the appearance but the deliberate choice to prioritize facial expressiveness over walking, grasping, or full-body autonomy. That choice exposes the core tradeoffs that will decide whether lifelike heads become practical social tools or costly curiosities.

AheadForm Origin M1 Video Overview

Direct Answer: The clip is a focused display of facial actuation: coordinated blinks, eye tracking motions, and small expression shifts. The footage does not show speech, locomotion, or live two-way interaction, so it demonstrates presence and mechanical control rather than conversational or autonomous operation.

What The Viral Video Actually Shows

The footage centers on a head unit that AheadForm identifies as the Origin M1. The clip demonstrates blinking, coordinated eye movement, and subtle expression shifts. It shows no speech, no full-body movement, and no real-time interaction with a human on screen.

Direct Answer: The demo highlights synchronized eyelid control and gaze transitions. It is a short, controlled sequence intended to showcase facial motion mechanics rather than to prove long-duration reliability, speech synchronization, or robust perception in unconstrained environments.

How The Origin M1 Is Built

Direct Answer: The platform pairs dense microactuation with sensing and control software. Mechanical design, actuator routing, and timing-sensitive software are all required to make small motions read as natural, and each domain introduces calibration and reliability demands that short clips do not reveal.

Actuators And Mechanics

AheadForm describes the head as integrating a facial actuation system with up to 25 micro motors, framed as artificial facial muscles. Multiple independent actuators allow eyelid control, horizontal and vertical gaze, and small muscle-like movements to combine into natural transitions.
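To make "combine into natural transitions" concrete, here is a minimal sketch of one common approach: treat each actuator as a channel with a normalized position and move all channels together along an eased curve, so a gaze shift and a brow raise read as a single motion. The channel names, the 0.0 to 1.0 position range, and the smoothstep easing are all assumptions for illustration; AheadForm has not published its control scheme, and this is not it.

```python
"""Hypothetical sketch: blending facial poses across independent actuator channels.

Channel names, the 0.0-1.0 position range, and the smoothstep easing are
illustrative assumptions, not AheadForm's actual control scheme.
"""

from typing import Dict

Pose = Dict[str, float]  # channel name -> normalized actuator position (0.0-1.0)

# Two hypothetical target poses sharing the same set of channels.
NEUTRAL: Pose = {"lid_left": 1.0, "lid_right": 1.0, "gaze_x": 0.5, "gaze_y": 0.5, "brow_inner": 0.5}
ATTENTIVE: Pose = {"lid_left": 1.0, "lid_right": 1.0, "gaze_x": 0.7, "gaze_y": 0.55, "brow_inner": 0.65}


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve so motion starts and stops gently instead of jerking."""
    t = min(max(t, 0.0), 1.0)  # clamp progress to [0, 1]
    return t * t * (3.0 - 2.0 * t)


def blend(start: Pose, end: Pose, t: float) -> Pose:
    """Interpolate every channel with the same eased weight so they move as one gesture."""
    w = smoothstep(t)
    return {ch: start[ch] + (end[ch] - start[ch]) * w for ch in start}


if __name__ == "__main__":
    # Sample the transition at five evenly spaced points in time.
    for step in range(5):
        t = step / 4
        pose = blend(NEUTRAL, ATTENTIVE, t)
        print(f"t={t:.2f} gaze_x={pose['gaze_x']:.3f} brow_inner={pose['brow_inner']:.3f}")
```

Sharing one eased weight across channels is the simplest design; a real head would likely stagger channels (eyes leading, brows following) and add per-actuator calibration, which is part of the complexity described next.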

The actuator count is the clearest engineering signal here: 25 actuators is modest compared to a whole humanoid skeleton but dense for a single head. That density adds mechanical complexity along with routing and calibration challenges that a short demo clip cannot reveal.

Sensing And Software

AheadForm states that the platform supports coordinated eye movement and synchronized speech and that it interprets nonverbal cues. The video does not show these perception or language functions in action, so they remain company positioning rather than independently demonstrated capabilities.

To feel natural, facial responses must be generated and executed within conversational time windows humans expect, which places demands on sensors, compute, and control software timing.

Why Faces Matter For Human Robot Interaction

Direct Answer: Faces act as the fastest route to social presence. Blinking and gaze convey attention and intent, improving initial engagement in customer service or reception contexts. The benefit is high impact for low physical scope, but it brings ethical and expectation management costs if behavior cannot match appearance.

Benefits Of Facial Expressiveness

A face that blinks and follows people with its gaze creates immediate psychological presence and improves engagement in settings such as customer service or reception. Designers invest in expressiveness because it is the most effective shortcut for making a machine feel alive in human interactions.

Social Risks And The Uncanny Valley

When appearance promises social intelligence but the underlying system cannot follow through, users experience confusion, distrust, or discomfort. Public reaction to the demo split between admiration and uncanny valley unease, showing that near-human expressiveness brings both payoff and friction.

Concrete Constraints And Tradeoffs

Direct Answer: Concentrating resources on a lifelike head creates at least two hard constraints: increased mechanical failure points with maintenance costs, and strict latency requirements for believable conversational timing. Cost and power needs also limit untethered, long-duration deployment compared to simple mechanical interfaces.

Mechanical Complexity And Maintenance

Twenty-five micro motors increase potential failure points and the calibration burden. If micro motors are rated for on the order of tens of thousands to a few hundred thousand cycles, and a face blinks and shifts gaze dozens of times per hour during busy use, actuator cycle counts could rise quickly, making maintenance and parts replacement a dominant operating cost.
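The cycle-count argument is simple arithmetic, sketched below under stated assumptions: the rated lives span the range mentioned above, "dozens per hour" is taken as 24 or 60 motions, and a 10-hour operating day is assumed. None of these numbers are published Origin M1 specifications.

```python
"""Back-of-envelope estimate of when a facial actuator reaches its rated cycle life.

All inputs are assumptions matching the ranges discussed in the article
(rated life from tens of thousands to a few hundred thousand cycles,
"dozens" of motions per hour); none are published Origin M1 figures.
"""


def days_to_rated_life(rated_cycles: int, cycles_per_hour: float, hours_per_day: float) -> float:
    """Return operating days until one actuator accumulates its rated cycle count."""
    if cycles_per_hour <= 0 or hours_per_day <= 0:
        raise ValueError("usage rates must be positive")
    return rated_cycles / (cycles_per_hour * hours_per_day)


if __name__ == "__main__":
    hours_per_day = 10  # assumed busy reception shift
    for rated in (20_000, 100_000, 300_000):
        for per_hour in (24, 60):  # "dozens" of blinks or gaze shifts per hour
            days = days_to_rated_life(rated, per_hour, hours_per_day)
            print(f"rated={rated:>7,} cycles, {per_hour}/h -> {days:7.1f} days")
```

At the pessimistic end (20,000 cycles, 60 motions per hour, 10-hour days) a single actuator reaches its rating in about 33 days; at the optimistic end (300,000 cycles, 24 per hour) it takes about 1,250 days. With 25 actuators on different duty cycles, replacements would be staggered rather than one-off events.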

Latency And Conversational Timing

Human conversational timing is unforgiving. Lag in facial response often becomes perceptible above roughly 150 to 200 milliseconds. If the perception, decision, and actuation loop adds tens to hundreds of milliseconds of latency, the result will not feel natural even when motion interpolation looks smooth.
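A quick way to reason about this is a latency budget: add up the delay of each stage in the loop and compare the total against the perceptibility threshold. The sketch below assumes the stages run strictly in sequence and uses made-up stage names and millisecond values; they are not measurements of the Origin M1. The threshold is set to 150 ms, the low end of the range above.

```python
"""Hypothetical latency budget for a perceive-decide-actuate loop.

Stage names and millisecond values are illustrative assumptions, not
measurements of the Origin M1. The 150 ms threshold is the low end of the
roughly 150-200 ms range cited in the article.
"""

from typing import Dict

PERCEPTIBLE_LAG_MS = 150.0


def total_latency(stages: Dict[str, float]) -> float:
    """Sum per-stage delays; this simple model assumes stages run one after another."""
    return sum(stages.values())


def within_budget(stages: Dict[str, float], threshold_ms: float = PERCEPTIBLE_LAG_MS) -> bool:
    """True if the end-to-end delay stays at or under the perceptibility threshold."""
    return total_latency(stages) <= threshold_ms


if __name__ == "__main__":
    local_loop = {"camera_capture": 33.0, "face_tracking": 20.0,
                  "response_selection": 30.0, "motor_travel": 50.0}
    cloud_loop = {"camera_capture": 33.0, "face_tracking": 20.0, "network_round_trip": 120.0,
                  "response_selection": 60.0, "motor_travel": 50.0}
    for name, loop in (("local", local_loop), ("cloud", cloud_loop)):
        ms = total_latency(loop)
        print(f"{name}: {ms:.0f} ms, within budget: {within_budget(loop)}")
```

With these assumed numbers the on-device loop totals 133 ms and fits, while adding a network round trip and slower response selection pushes the total to 283 ms, well past the point where lag becomes noticeable. Real systems can overlap stages, so the sequential sum is a conservative upper bound.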

Cost And Deployment Boundaries

Building compact, high-fidelity expressive heads with many specialized actuators and precise mechanics usually pushes unit cost into at least the thousands of dollars, and R&D, tooling, and iteration costs tend to reach tens to hundreds of thousands of dollars as development scales. Power, cabling, and compute needs make untethered, long-duration use more difficult than a demo implies.

AheadForm Origin M1 Versus Alternatives

Direct Answer: Expressive heads prioritize social presence while full humanoid systems prioritize mobility and manipulation. The right choice depends on use case: reception and retail value presence more, while logistics or physical assistance require bodies that can move, lift, or manipulate objects reliably.

Expressive Head Vs Full Humanoid

Expressive heads give up locomotion and manipulation in exchange for greater facial realism. This approach reduces mechanical scope and concentrates cost on actuating and controlling a single region, which makes social interfaces easier to deploy but rules out most physical tasks.

Lifelike Face Vs Clearly Mechanical Interface

A lifelike face can boost engagement, but a clearly mechanical interface may be more dependable and less likely to mislead. Trust often emerges from predictable behavior over time, not from a convincing first impression, so deployment contexts must weigh initial engagement against long-term reliability.

Who This Is For And Who This Is Not For

Who This Is For: Organizations that need immediate social presence in human-facing roles such as greeting, reception, or guided information kiosks, and that can absorb maintenance and disclosure responsibilities.

Who This Is Not For: Applications requiring heavy physical interaction, untethered long-duration operation without frequent servicing, or contexts where clear transparency about machine capability is required and a lifelike face risks misleading users.

What To Watch Next

Two developments will determine whether expressive heads scale usefully. First, whether manufacturers can demonstrate sustained, interactive, and safety-conscious behavior that matches facial realism in real environments. Second, whether standards for disclosure, consent, and deployment emerge for human-like machines in public and private spaces.

Summary And Practical Takeaways

The demo makes a clear point: convincing facial presence is now mechanically feasible and socially striking. AheadForm Origin M1 surfaces the tradeoff between immediate psychological presence and the hidden costs of maintenance, latency, and deployment. Decision makers should treat lifelike faces as maintenance and policy commitments, not cosmetic add-ons.

FAQ

What Is The AheadForm Origin M1?

The Origin M1 is a head unit showcased in a viral clip that demonstrates coordinated blinks, gaze shifts, and subtle facial motions. The footage focuses on facial actuation and does not show speech, full body movement, or live interactive operation.

How Realistic Does The Robot Face Look?

The face appears highly realistic in motion, especially for blinking and gaze. Viewers split between admiration and uncanny valley discomfort, indicating strong perceptual presence but also social friction when behavior may not match appearance.

Does The Origin M1 Walk Or Talk?

No walking or live speech is shown in the demo. AheadForm claims support for synchronized speech in its positioning, but the video itself does not demonstrate walking, autonomous locomotion, or real-time conversational exchanges.

How Many Actuators Does The Head Use?

AheadForm describes the platform as integrating up to 25 micro motors for facial actuation, enabling independent eyelid, gaze, and small muscle-like movements. That actuator density increases mechanical and calibration complexity.

What Are The Main Maintenance Concerns?

Main concerns are actuator wear and calibration. If micro motors are rated for tens of thousands to a few hundred thousand cycles, frequent blinking and gaze shifts in heavy use will drive maintenance, parts replacement, and recalibration as primary operating costs.

Is A Lifelike Face Misleading Or Harmful?

A lifelike face can create ethical questions about deception and manipulation. Deployment will raise scrutiny over disclosure, consent, and appropriate contexts. Trust typically depends on predictable behavior over time, not only convincing appearance.

How Does An Expressive Head Compare To A Full Robot?

Expressive heads prioritize social presence and reduce physical scope, while full robots add mobility and manipulation. The right choice depends on whether the task values interpersonal engagement more than physical interaction and autonomy.

Will These Faces Become Common In Public Spaces?

That is uncertain. Adoption depends on whether perception, safety, long-term reliability, and standards for disclosure catch up with facial realism. Procurement, maintenance, and policy factors will shape real-world deployment decisions.
