Expensive monthly bills are not caused by clever agents. They are caused by default behavior that quietly bills you every minute of the day.

The real significance here is not swapping to a cheaper model. It is identifying where tokens, API calls, and routing decisions leak value, then fixing those leak paths. When you treat models as an expensive resource rather than an unlimited utility, a small set of changes compounds into an order-of-magnitude improvement.

What becomes obvious when you look closer is how much of running always-on agents is operational housekeeping. Local search, session initialization, free web search, smart routing, and local heartbeats are not theoretical optimizations. They are practical knobs that alter monthly cost by hundreds or thousands of dollars.

This article lays out the five-step playbook, explains why each step matters, and calls out the tradeoffs and constraints you will face when you apply them.

Why OpenClaw Costs Run Away

OpenClaw itself is neutral. The runaway costs come from three predictable sources: excessive context sent to paid models, paid services used for trivial tasks, and blunt routing that sends every request to the priciest model available.

Two concrete behaviors explain most of the cost: pasting whole documents into prompts and retaining session history by default.

Feeding long markdown files, PDFs, or research notes into prompts deposits thousands of billable tokens into every request. Meanwhile, each active session can accumulate many kilobytes of history and, across time, millions of tokens.

Quick Explanation: Excessive token context and undifferentiated routing are the primary drivers of ongoing OpenClaw cost. By treating routine retrieval and heartbeat operations as free or local, you stop billing cycles that compound across sessions.

Five-Step Playbook To Cut Costs

Step 1: Unlock Local Search With qmdskill

Index documents locally and retrieve only the snippets you need. QMDskill combines BM25 and vector search so your agent can query a local markdown knowledge base and return targeted passages.

The impact is dramatic. Reported token usage drops by as much as 95 percent, and around 90 percent of research tasks become effectively token-free. In operational terms, expect heavy reductions in per-request token counts and far fewer expensive model calls for retrieval work.

How QMDskill Works

QMDskill builds a searchable index of your markdown or document corpus and uses a mix of lexical relevance and vector similarity to surface tight passages. Instead of sending whole documents, the agent asks QMDskill for concise context, dramatically reducing billable tokens while preserving retrieval quality.
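To make the "lexical plus vector" idea concrete, here is a minimal sketch of hybrid retrieval. It is not QMDskill's implementation: the function names are hypothetical, the BM25 parameters are the common textbook defaults, and a cosine over term counts stands in for a real embedding model so the example stays dependency-free.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with the standard BM25 formula."""
    tokenized = [tokenize(d) for d in docs]
    avg_len = (sum(len(t) for t in tokenized) / len(tokenized)) or 1.0
    n = len(docs)
    query_terms = set(tokenize(query))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        score = 0.0
        for term in query_terms:
            df = sum(1 for t in tokenized if term in t)
            if df == 0:
                continue
            idf = math.log(1 + (n - df + 0.5) / (df + 0.5))
            tf = counts[term]
            score += idf * tf * (k1 + 1) / (tf + k1 * (1 - b + b * len(tokens) / avg_len))
        scores.append(score)
    return scores


def cosine_scores(query, docs):
    """Toy stand-in for vector similarity: cosine over term-count vectors."""
    q = Counter(tokenize(query))
    q_norm = math.sqrt(sum(c * c for c in q.values()))
    out = []
    for d in docs:
        v = Counter(tokenize(d))
        dot = sum(q[t] * v[t] for t in q)
        norm = q_norm * math.sqrt(sum(c * c for c in v.values()))
        out.append(dot / norm if norm else 0.0)
    return out


def hybrid_search(query, docs, top_k=1, alpha=0.5):
    """Blend max-normalized BM25 and cosine scores; return the top_k passages."""
    lexical = bm25_scores(query, docs)
    semantic = cosine_scores(query, docs)
    max_lex = max(lexical) or 1.0
    blended = [alpha * (l / max_lex) + (1 - alpha) * s for l, s in zip(lexical, semantic)]
    ranked = sorted(range(len(docs)), key=lambda i: blended[i], reverse=True)
    return [docs[i] for i in ranked[:top_k]]
```

Only the passages returned by `hybrid_search` would be placed in the prompt, which is where the token savings come from. In a real setup the cosine step would use embeddings from an actual model rather than term counts.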

Step 2: Kill Session History Bloat With Initialization Rules

OpenClaw loads sizeable context by default. An agent could load roughly 50 kilobytes of history on every message, which compounds into millions of tokens per session. The remedy is a session initialization rule in the system prompt that explicitly defines what to load at session start and what to ignore.

After applying this rule, context size drops from around 50 kilobytes to roughly 8 kilobytes and cost per session falls from about 40 cents to around 5 cents. For messaging apps where sessions persist, this change prevents slow, silent cost creep.
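The rule itself lives in the system prompt, but the budgeting logic it encodes can be sketched in code. The example below is an illustration under assumptions, not OpenClaw's behavior: `build_session_context`, the `pinned` items, and the 8,000-byte default are hypothetical, chosen to mirror the roughly 8-kilobyte target mentioned above.

```python
def build_session_context(pinned, history, budget_bytes=8_000):
    """Return (context, bytes_used) for session start, capped at budget_bytes.

    pinned:  short, always-loaded strings (hypothetical: identity, user profile).
    history: prior messages, oldest first; only the newest ones that fit are kept.
    """
    selected = []
    used = 0
    for item in pinned:
        size = len(item.encode("utf-8"))
        if used + size > budget_bytes:
            break
        selected.append(item)
        used += size

    recent = []
    # Walk history from newest to oldest and stop at the first message that overflows.
    for message in reversed(history):
        size = len(message.encode("utf-8"))
        if used + size > budget_bytes:
            break
        recent.append(message)
        used += size

    # Restore chronological order for the messages that made the cut.
    return selected + list(reversed(recent)), used
```

With a 50-kilobyte history of 1,000-byte messages and one short pinned instruction, only the seven most recent messages fit, and everything older is simply not loaded.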

Step 3: Replace Paid Web Search With Exa AI

Paid search APIs are convenient but expensive when agents run continuously. Exa AI is a free alternative. Setup takes about 30 seconds: enable the Exa MCP in the developer docs, copy the MCP link, and wrap it into a skill so your agent can perform real-time searches without paid API credits.

For agents that need current events or web references, this removes another recurring line item from the bill without degrading capability.

Step 4: Stop Using A Ferrari To Buy Groceries With Automatic Model Routing

Routing every request to the most expensive model is a textbook inefficiency. The recommended fix is a routing layer, built with tools like OpenRouter or ClawRouter, that inspects each request and sends it to the cheapest model able to meet the task requirements.

Simple tasks can go to very low-cost models, mid-tier tasks to models like GPT-4o or Sonnet, and only complex reasoning touches a high-end model such as Opus. The decision happens locally and, crucially, in under a millisecond, so there is no noticeable latency penalty.
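As a rough sketch of what such a local routing decision might look like, the function below picks a tier from cheap heuristics. Everything here is an assumption for illustration: the tier labels, the keyword list, and the word-count thresholds are hypothetical, and this is not how OpenRouter or ClawRouter decide internally.

```python
import re

# Hypothetical hints that a request needs deeper reasoning.
REASONING_HINTS = re.compile(
    r"\b(prove|derive|architect|refactor|debug|trade-?offs?|step[- ]by[- ]step)\b",
    re.IGNORECASE,
)


def route(prompt: str, needs_tools: bool = False) -> str:
    """Pick the cheapest tier that plausibly fits the request.

    Returns one of the hypothetical labels "low-cost", "mid-tier", "high-end";
    map them to concrete models in your own routing layer.
    """
    words = len(prompt.split())
    if REASONING_HINTS.search(prompt) or words > 400:
        return "high-end"
    if needs_tools or words > 60:
        return "mid-tier"
    return "low-cost"
```

Because the check is a regular expression and a word count, it runs without any network call, which is why a decision of this kind adds no meaningful latency. Real heuristics would need tuning against your own traffic.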

Step 5: Route Heartbeat Checks To A Free Local LLM

Agents send heartbeat checks to confirm liveness. If those checks hit paid APIs, the cost adds up. The recommended fix is to route heartbeats to a free local LLM served through a runtime such as Ollama. By running a lightweight model locally, heartbeat calls become internal operations that cost nothing in API credits.

Many setups can run a small local model on modest hardware. Once configured, the heartbeat traffic disappears from your bill without changing agent behavior.
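A minimal sketch of the heartbeat offload, assuming Ollama is running locally on its default port and exposing its `/api/generate` endpoint. The model tag, the `pick_backend` helper, and the task-type labels are hypothetical; how OpenClaw itself is configured to use the local endpoint is not covered here.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port with a small model pulled.
OLLAMA_URL = "http://localhost:11434/api/generate"
LOCAL_MODEL = "llama3.2:1b"  # hypothetical choice; any small local model works


def pick_backend(task_type: str) -> str:
    """Send heartbeats to the local model; everything else stays on the paid path."""
    return "local" if task_type == "heartbeat" else "paid"


def build_heartbeat_request(prompt: str = "Reply with OK.") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request for Ollama."""
    body = json.dumps({"model": LOCAL_MODEL, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def heartbeat_ok(timeout: float = 5.0) -> bool:
    """Return True if the local model returns any text; False on connection or parse errors."""
    try:
        with urllib.request.urlopen(build_heartbeat_request(), timeout=timeout) as resp:
            return bool(json.loads(resp.read()).get("response"))
    except (OSError, ValueError):
        return False
```

Calling `heartbeat_ok()` on a schedule keeps the liveness check entirely on your own machine, so it consumes no paid tokens.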

Why Each Fix Matters

Local Search Benefit

Local search reduces the tokens sent per request and shifts retrieval work off paid models. That directly lowers per-request billing and prevents repeated reads of large documents, which is where most of the token waste originates.

Session Initialization Benefit

Initializing sessions with a tight system prompt avoids uncontrolled history growth. Smaller contexts mean fewer tokens per message and predictable, auditable session cost profiles that stop slow accumulation of charges.

Routing And Heartbeat Benefit

Smart routing and local heartbeats reassign trivial, repetitive work to cheaper or free systems. The result is fewer high-cost calls and a cost profile that reflects task complexity rather than default safety margins.

Quantified Savings And Tradeoffs

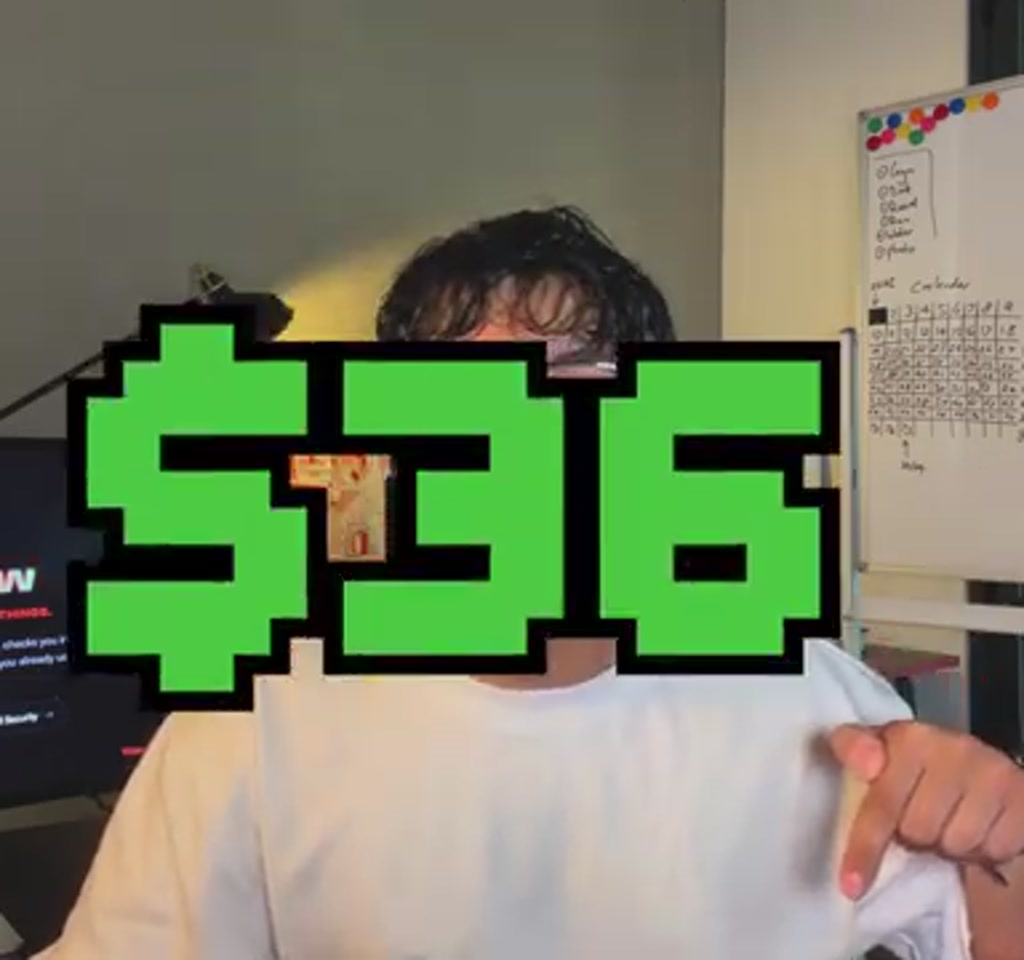

A real OpenClaw deployment dropped from roughly $1,200 per month to about $36 after applying these five changes. That is consistent with token reductions and routing choices compounding multiplicatively rather than additively.
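The arithmetic behind those figures is worth spelling out. The first two calculations use numbers quoted in this article; the three per-step factors in the last part are purely hypothetical, picked only to show how independent reductions multiply.

```python
def reduction(before: float, after: float) -> float:
    """Fractional reduction going from before to after."""
    return 1 - after / before


monthly = reduction(1200, 36)        # monthly bill figures quoted in the article
per_session = reduction(0.40, 0.05)  # per-session figures quoted in the article

print(f"monthly bill reduction: {monthly:.1%}")
print(f"per-session reduction:  {per_session:.1%}")

# Hypothetical illustration of compounding: each change leaves a fraction of
# the previous cost, and the fractions multiply rather than add.
remaining = 1.0
for factor in (0.30, 0.40, 0.25):  # assumed values, not measurements
    remaining *= factor
print(f"combined remaining cost: {remaining:.1%}")
```

No single assumed step cuts more than 75 percent, yet together they leave about 3 percent of the original cost, the same ratio as $36 out of $1,200.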

Two concrete constraints to keep in mind:

- The local search approach requires an indexing and storage cost. Indexing a moderate markdown knowledge base is cheap in compute but it is not free. Expect indexing time and storage overhead on the order of minutes and megabytes to low gigabytes depending on data volume.

- Offloading to local models for heartbeats and trivial checks trades operational complexity for cost. Running a local LLM means periodic updates, occasional model pulls, and some disk usage. For many teams that cost is smaller than recurring API charges, but it is a maintenance commitment.

Additional quantified context helps set expectations:

- Token reductions from local search are reported up to 95 percent, and many common research tasks may become effectively free from the perspective of token billing.

- Session context reduction moved from around 50 kilobytes to about 8 kilobytes, dropping per-session cost from roughly $0.40 to $0.05 in the example provided.

- Heartbeat traffic can translate to thousands of paid API calls per month if left on paid providers, so routing those checks locally removes a recurring burden that often sits unnoticed on invoices.

The tradeoff picture appears when you examine operational friction. Implementing QMDskill needs a knowledge base and retrieval logic. Model routing requires a routing layer and sensible heuristics for task complexity. Free web search via Exa AI is fast to enable but it changes trust boundaries for external data. Each choice shifts cost into a different bucket: one into engineering time, another into maintenance, and another into storage.

OpenClaw vs Alternatives

OpenClaw paired with qmdskill and local offload emphasizes operational controls rather than model price comparison. Compared to a setup that only swaps models for lower list prices, the playbook reduces recurring token and API charges by changing where and how context is prepared and served.

OpenClaw Compared To Model-Only Cost Cuts

Swapping a model reduces unit price but leaves consumption patterns intact. Cutting context and routing waste produces far larger, sustained gains because it reduces the total billable tokens and calls across the system.

Verification Checklist And Operational Notes

After making changes, verify these behaviors end-to-end. Here’s a clear checklist:

- Research queries hit qmdskill instead of paid models.

- Session context starts small on new conversations.

- Web search uses a free integration, such as Exa AI, successfully.

- Model routing distributes tasks across multiple models based on complexity.

- Heartbeat checks are routed to a local LLM and do not consume paid tokens.

Operational advice that is worth repeating: prioritize the simplest wins first. Local search and session initialization often deliver the biggest return on the least work. Model routing and local heartbeats are next-level optimizations that compound savings.

Practical Monitoring Tips

Track token consumption by endpoint and by session. If a single endpoint or session is responsible for an outsized fraction of tokens, you have an obvious place to apply the session initialization rule or to trim prompt sizes.

Measure model spend per thousand requests before and after routing is enabled. Expect to see a sharp drop in average cost per request if routing is effective.
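Both measurements can be computed from a plain usage log. The sketch below assumes each record is a dictionary with `endpoint`, `session`, `tokens`, and `cost_usd` fields; that schema and the function names are hypothetical, so adapt them to whatever your provider or gateway actually exports.

```python
from collections import defaultdict


def tokens_by(records, key):
    """Sum tokens per endpoint or per session, largest consumer first."""
    totals = defaultdict(int)
    for r in records:
        totals[r[key]] += r["tokens"]
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


def cost_per_thousand_requests(records):
    """Average spend per 1,000 requests over the given records."""
    if not records:
        return 0.0
    return 1000 * sum(r["cost_usd"] for r in records) / len(records)


# Hypothetical usage records for illustration only.
records = [
    {"endpoint": "research", "session": "s1", "tokens": 9000, "cost_usd": 0.09},
    {"endpoint": "research", "session": "s2", "tokens": 3000, "cost_usd": 0.03},
    {"endpoint": "heartbeat", "session": "s1", "tokens": 200, "cost_usd": 0.002},
]

top_endpoints = tokens_by(records, "endpoint")
top_sessions = tokens_by(records, "session")
```

Run `cost_per_thousand_requests` on a window of records from before routing was enabled and again on a window from after, then compare the two values.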

When This Approach Is Less Effective

This strategy is least useful when every request genuinely requires high-end reasoning on full documents. If your workload is dominated by sustained, large-context reasoning that cannot be reduced via retrieval, cost will remain dominated by premium model usage.

That said, most real-world agent workloads mix retrieval, small checks, and occasional complex reasoning. For those mixes, the approach shifts the cost balance dramatically. The tension remains for pure, large-context reasoning workloads and is discussed further in the forward look below.

A Forward Look At Agent Economics

From an editorial standpoint, the lesson is broad. As agents become embedded in workflows and messaging platforms, the economics of always-on systems will be governed as much by operational discipline as by model price lists. Token management, routing logic, and local offload are becoming first-class cost controls.

There is a subtle cultural shift implied here. Teams that treat model calls as free will run into scaling walls. Teams that instrument and route will convert a hobby cost profile into a sustainable operating model. That is where the real opportunity lies.

For people running OpenClaw today, apply local search first, then lock down session initialization, add free web search, enable automatic routing, and finally offload heartbeats locally. Each step compounds the previous savings.

Who This Is For And Who This Is Not For

Who This Is For: Teams running always-on agents, chatbots, or message-driven automation that incur recurring API charges due to retrieval, session history, or heartbeat traffic. The playbook favors groups able to invest modest engineering time for large monthly savings.

Who This Is Not For: Projects that legitimately require continuous, high-end reasoning across full documents with no possibility of reducing context through retrieval. Teams unwilling to accept the maintenance cost of local models or the additional storage overhead should also weigh alternatives.

FAQ

What Is The Main Cause Of High OpenClaw Costs?

Excessive billable tokens and undifferentiated routing. Long prompt contexts and default session history are the most common culprits that silently accumulate costs.

How Much Can QMDskill Reduce Token Usage?

Reported token reductions reach up to 95 percent for many research tasks. Results will vary with data shape and query patterns, but local retrieval is described as the largest single lever for lowering token bills.

Can I Replace Paid Web Search With Exa AI Safely?

Exa AI is offered as a free alternative to paid search APIs. It removes recurring search charges but changes where you validate external content. Teams should test for coverage and trust before full substitution.

Does Model Routing Add Noticeable Latency?

Routing decisions can run locally in under a millisecond, producing no noticeable latency for users. Actual performance depends on your routing implementation and infrastructure.

Are Local Heartbeats Hard To Maintain?

Running a small local LLM for heartbeats introduces maintenance tasks such as updates and disk usage. The work is modest compared to recurring API charges, but it is a tradeoff to plan for.

Will These Fixes Work For All Agent Workloads?

They work best when the workload mixes retrieval, small checks, and occasional complex reasoning. Pure, sustained large-context reasoning workloads will see smaller relative gains because the bulk of cost remains in premium model usage.

How Do I Verify Savings After Changes?

Measure token consumption and model spend per endpoint and per session before and after each change. It’s recommended to track the top token consumers and confirm research, routing, and heartbeat behavior end-to-end.

Is The $1,200 To $36 Example Typical?

Not necessarily. Your mileage will vary based on usage patterns, data volumes, and the exact models in your stack, but the example demonstrates how savings multiply when several leak paths are closed at once.
